Concurrency

Concurrency is about executing multiple threads at once, either on a single core or multiple cores.

There are broadly two styles of concurrency:

- communicate by sharing memory

- share memory by communicating (popularized by Go)

Shared Memory

This is the more popular form of concurrency that involves sharing memory regions among multiple threads. We need to be able to guard those regions of memory to ensure exclusive access by guaranteeing mutual exclusion

Note: To understand implementation details of mutexes look at the atomics section below

Mutexes

Mutexes ensure exclusive access to a shared resource in a multi-threaded environment. A thread locks a mutex before accessing a shared resource and unlocks it after. Example:

pthread_mutex_lock(&mutex);

// Critical section

pthread_mutex_unlock(&mutex);

Condition Variables

Condition variables allow threads to wait for certain conditions. They’re used with mutexes to synchronize thread execution. Example:

pthread_cond_wait(&cond, &mutex); // Wait

pthread_cond_signal(&cond); // Signal another thread

Semaphores

Semaphores manage access to shared resources, allowing multiple accesses. They’re particularly useful in signal handlers. Example:

sem_wait(&sem); // Decrement and wait if zero

sem_post(&sem); // Increment, potentially unblocking a waiter

Atomics

Atomics are a complicated low-level topic that form the foundation on top of which mutexes are built.

Atomic operation is an indivisible operation. There are different types of atomics in C++ like std::atomic_flag and std::atomic

Operations In Atomics

load()store()exchange()compare_exchange_weak()compare_exchange_strong()

Memory Order Operations

memory_order_relaxedmemory_order_acquirememory_order_consumememory_order_acq_relmemory_order_releasememory_order_seq_cst

Each atomic operation has a certain subset of memory orderings that can be specified for it. Atomic operations combined with memory ordering can create certain relationships.

For a small set of implementations using atomics in Rust, see here

Communicating Memory

This is the style of concurrent programming popularized by Go. It’s safer due to not locking regions of memory, thus avoiding classic problems like deadlocks. This style is also called Communicating Sequential Processes (CSP).

package main

import (

"fmt"

"time"

)

func worker(done chan bool) {

fmt.Print("working...")

time.Sleep(time.Second)

fmt.Println("done")

done <- true

}

func main() {

done := make(chan bool, 1)

go worker(done)

<-done

}

The main thread blocks until the value of done is set to true.

MPSC in Rust is an example of Communicating Sequential Processes. The core principle of CSP is, “don’t communicate by sharing memory, share memory by communicating”.

CPS (Continuation Passing Style)

An alternative to CSP above is called CPS which is a complicated term for passing callbacks to functions. JS is a popular example of this.

function fetchData(url, callback) {

// Simulating an asynchronous API call

setTimeout(() => {

const data = { id: 1, name: "John Doe" };

callback(data);

}, 1000);

}

function processData(data) {

console.log("Processing data:", data);

}

fetchData("<https://example.com/api>", processData);

Blog post detailing this more: https://matt.might.net/articles/by-example-continuation-passing-style/

Promises are a nicer version of this CPS paradigm.

step1(5)

.then(result1 => step2(result1))

.then(result2 => step3(result2))

.then(finalResult => console.log("Final result:", finalResult))

.catch(error => console.error("An error occurred", error));

Async Runtimes, Web Servers & Async IO

This section is a little specific to Rust. Async runtimes are about managing concurrency in the context of an application - a somewhat higher level than mutexes and CSP.

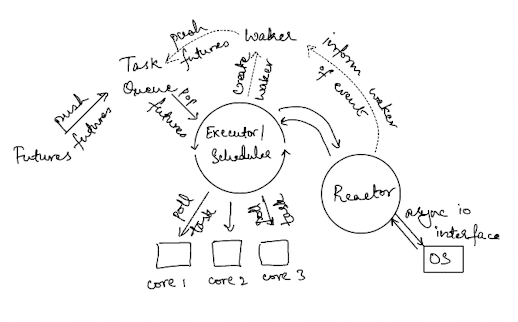

Roughly what they do

Any runtime has the following core concepts:

- Scheduler

- Tasks & Futures

- Wakers

- Reactors

Scheduler

The scheduler roughly combines:

- event loops

- task poller It is the “hot” loop of the entire async runtime.

Tasks & Futures

Futures (atleast in Rust) are anything that implement the Future trait.

struct SharedState {

completed: bool,

waker: Option<Waker>,

}

pub struct TimerFuture {

shared_state: Arc<Mutex<SharedState>>,

}

impl Future for TimerFuture {

type Output = ();

fn poll(

self: std::pin::Pin<&mut Self>,

cx: &mut std::task::Context<'_>,

) -> std::task::Poll<Self::Output> {

let mut shared_state = self.shared_state.lock().unwrap();

if shared_state.completed {

return Poll::Ready(());

}

shared_state.waker = Some(cx.waker().clone());

return Poll::Pending;

}

}

Wakers

A waker is an object that can be used to put the task/future back onto a run queue.

impl ArcWake for Task {

fn wake_by_ref(arc_self: &Arc<Self>) {

let arc_clone = Arc::clone(&arc_self);

arc_self.task_sender.send(arc_clone).unwrap();

}

}

Reactors

Reactors are components that can react to the readiness of events. These are connected to the async runtime and can enqueue tasks onto the scheduler, once they are ready.

Mechanisms typically include poll , epoll (on Linux) & kqueue (on Unix). These are typically used to signal readiness of sockets so that events can be read from them.

It’s important to discuss here the difference between the different types of async io models - readiness based & completion based.

epoll and kqueue fall under the readiness based model. These syscalls only indicate when a file descriptor is available to be read from or written to. There’s the overhead of an additional syscall to actually do the writing or reading in this case. io_uring on the other hand falls under the completion based model. This model involves providing the file descriptors you’re interested in reading from or writing to and getting the actual results back. It avoids the overhead of the additional syscall. One interesting difference is that it makes no sense to use the readiness based model when doing file I/O. It doesn’t mean anything to wait for file “readiness” in this case since files are always ready for I/O.

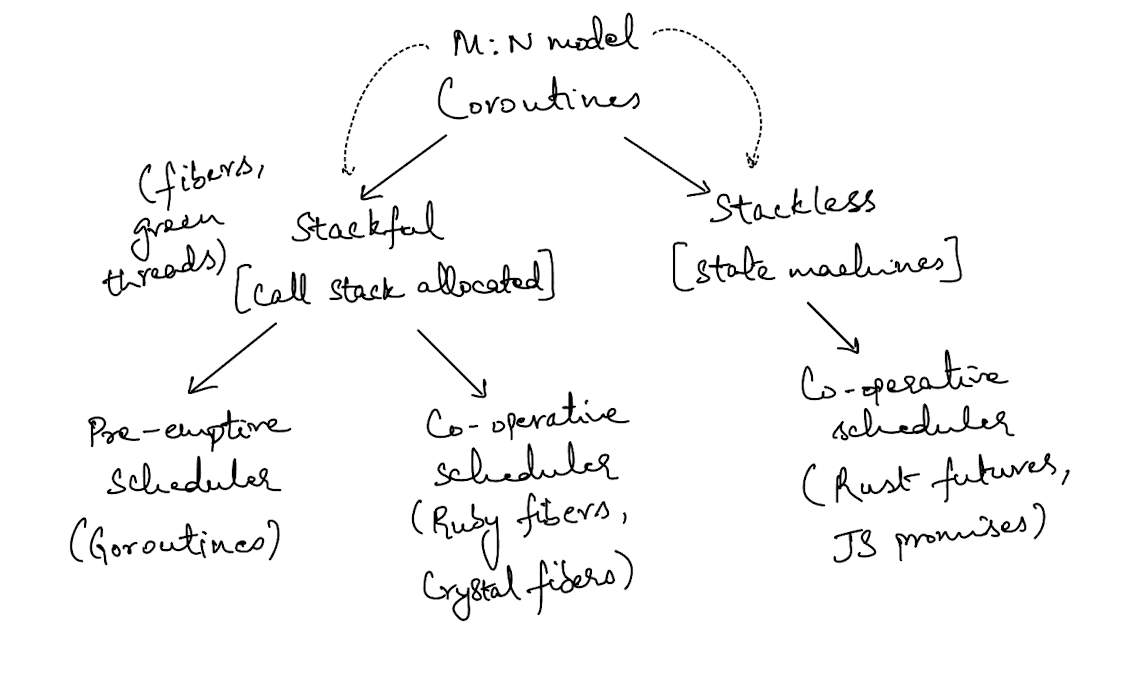

Cooperative & Preemptive Schedulers

It’s important to discuss these two terms when talking about async runtimes. To understand this, consider the following two classes of coroutines:

- Stackful (have an OS-like stack allocated) -> also called green threads, fibers etc.

- Stackless (no call stack allocated) -> also called futures, promises etc.

Stackful coroutines can be pre-empted by the scheduler. Stackless coroutines cannot be pre-empted by the scheduler.

Mix and match above two combinations. stackless + cooperative → Rust futures, JS promises stackful + preemptive → Goroutines stackless + preemptive → isn’t possible stackful + cooperative → need examples

Stackless coroutines require the rewiring of the language to use “coloured” functions. Stackful coroutines don’t involve this. Bob Nystrom blog post

Styles Of Runtimes

Broadly:

- single-threaded

- work-stealing

- thread-per-core

Resources

External

Atomics & Memory Ordering

- Understanding Atomics And Memory Ordering

- The memory order reference from cppreference

- Atomics on the GCC Wiki

- Memory Ordering At Compile Time

- Memory Barriers From The Linux Kernel Documentation

- ArangoDB’s blog on memory barriers in C++

- The Danger Of Atomic Operations

Sync & Async Web Servers

- How Async Await Works In Python

- Concurrent Servers Part I

- Rust Book Final Project - Web Server

- Async Rust Book Final Project

- Too Many Web Servers

Aysnc Runtimes

Schedulers

- Tokio Scheduler

- How Tokio Schedules Tasks

- InfluxDB Using Tokio For CPU Bound Tasks

- Scheduling Internals

Reactors / Async IO

Miscellaneous

- Async I/O In Depth Playlist

- GitHub Repo For Above Playlist

- Withoutboats on thread-per-core [paper referenced in post]

- Thread-Per-Core In Async Rust

- Phil’s tweet comparing runtime approaches